Clinical Researcher—September 2021 (Volume 35, Issue 7)

PRESCRIPTIONS FOR BUSINESS

Geoffrey Gill, MS

Clinical trials have become more expensive and less reliable as the focus of therapy development has shifted toward managing chronic illnesses. Wearables and other digital technologies could transform clinical trials by allowing investigators to move from occasional, often subjective, measures of health, such as patient-reported outcomes, to continuous, objective measures, such as the patient's level of activity or quality of sleep as measured by a wearable device. These metrics can be highly targeted and precise.

For example, the European Medicines Agency recently accepted stride velocity 95th centile, measured at the ankle with a wearable device, as a secondary endpoint for ambulant patients with Duchenne muscular dystrophy.{1} Recognizing the potential of digital endpoints, the Clinical Trials Transformation Initiative (CTTI) released a series of recommendations, including a detailed flowchart showing how to develop endpoints, in June 2017.{2} These recommendations provide a clear path to developing digital endpoints.
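To make this endpoint concrete: the 95th centile is a high percentile of the distribution of stride velocities recorded over the monitoring period, so it captures a patient's fastest strides in everyday life rather than performance on a single clinic-based test. The Python sketch below assumes strides have already been detected and their lengths and durations estimated. The function names and the linear-interpolation percentile convention are illustrative assumptions, and the requirements the qualified measure places on the device, wear time, and recording period are omitted.

```python
def percentile_linear(values, p):
    """Return the p-th percentile (0-100), interpolating linearly between ranks.

    This follows the same convention as NumPy's default percentile method.
    """
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    position = (len(ordered) - 1) * p / 100.0
    lower = int(position)  # floor, since position is non-negative
    upper = min(lower + 1, len(ordered) - 1)
    fraction = position - lower
    return ordered[lower] + (ordered[upper] - ordered[lower]) * fraction


def stride_velocity_95th_centile(strides):
    """strides: (stride_length_m, stride_duration_s) pairs for detected strides."""
    velocities = [length / duration for length, duration in strides]  # m/s
    return percentile_linear(velocities, 95)


# Hypothetical strides from one recording period.
strides = [(1.10, 1.05), (1.20, 1.00), (0.95, 1.10), (1.30, 0.98), (1.05, 1.02)]
print(round(stride_velocity_95th_centile(strides), 3))
```

Using a high centile rather than the mean makes the summary less sensitive to the many slow, incidental steps people take around the house.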

The Quest for Qualification and Validation

Despite the potential benefits of digital endpoints and clear guidance on how to qualify them, getting even one endpoint accepted by regulators still requires significant work. Part of the challenge is that there are thousands of potential digital endpoints and more than 100 types of digital sensors, each with its own algorithms and outputs. Even different versions of the same device often use different algorithms that generate different results.

Endpoints must also be validated in the patient population of interest, because validation in one patient group does not guarantee that an endpoint will provide accurate outputs for another. It is therefore very unlikely that a study team will find a prepackaged solution with a validated endpoint and sensor combination for its pathology of interest. In practice, clinical trial study teams face a choice: go through the entire validation process for their particular patient population, sensor, and endpoint combination; capture only an exploratory endpoint; or abandon the effort altogether. Further, teams that choose the exploratory route and want to reuse the endpoint in later trials will be tied to that particular sensor unless it provides the raw data and its algorithm is available.

Fortunately, open-source algorithms can dramatically streamline this process. The V3 validation framework published by members of the Digital Medicine Society{3} breaks the validation process into three logically distinct steps:

- Verification – verifying that the sensor provides accurate raw data.

- Analytical Validation – showing that the algorithm accurately converts the sensor data into a measure of a physical phenomenon, such as steps.

- Clinical Validation – ensuring that the physical phenomenon is a clinically relevant measure.

The first step, verification, depends only on the sensor. It should be performed by the manufacturer and should need to be done only once. Given verified data, both analytical and clinical validation depend on the algorithm. By using open-source algorithms, researchers can therefore share algorithm validation regardless of which sensor generated the data, provided that sensor has passed verification.

Atopic dermatitis (eczema) illustrates the power of this approach. Many potential endpoints of interest for this condition could be measured using wearable sensors,{4} including:

- Scratching events per hour

- Number of scratching events

- Scratching duration per hour

- Total sleep time

- Wake after sleep onset

- Sleep efficiency

Open-source algorithms already exist for these endpoints. For example, Pfizer has developed software called Scratch.PY that calculates all of these endpoints for nocturnal scratching and sleep from wrist-worn accelerometer data.{5} Pfizer has performed significant validation studies on this algorithm, and it is free for anyone to use. Researchers who employ Scratch.PY can build on this validation effort, and if they publish their results, they strengthen the foundation for future researchers seeking to validate endpoints for their own studies.

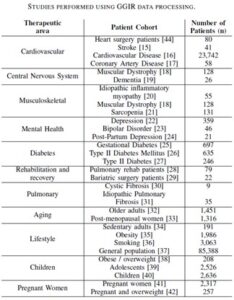

GGIR, an open-source package that generates a wide variety of activity and sleep endpoints from wrist-worn accelerometer data, shows how a validation foundation can be built steadily over time. More than 300 peer-reviewed papers have used GGIR, including more than 90 published in the past year alone.{6} Hundreds of thousands of participants have been studied across a wide variety of therapeutic areas. Table 1 summarizes a small selection of studies that used GGIR to process their data.{7} No single organization could match this volume and pace of research.

Table 1:

What's more, all of this validation material applies to any sensor that provides accurate accelerometry data, and GGIR studies have used many different types of wrist-worn accelerometers. By using open-source code, researchers are no longer tied to a single device and can draw on validation and user studies from a wide community of researchers.
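The device independence described above can be sketched in a few lines. ENMO (Euclidean norm minus one), the activity metric GGIR computes by default, is defined on acceleration expressed in units of gravity (g), so only the conversion from raw sensor output to g is device-specific; that conversion is what verification covers. In the Python sketch below, the two devices and their counts-per-g scale factors are hypothetical, and GGIR's calibration and epoch-averaging steps are omitted.

```python
import math

# Hypothetical scale factors (raw counts per g) for two unnamed devices.
COUNTS_PER_G = {"device_a": 4096, "device_b": 256}


def to_g(raw_samples, device):
    """Device-specific step: convert raw (x, y, z) counts to units of g."""
    scale = COUNTS_PER_G[device]
    return [(x / scale, y / scale, z / scale) for x, y, z in raw_samples]


def enmo(samples_g):
    """Shared step: Euclidean norm minus one, with negative values set to zero."""
    return [max(math.sqrt(x * x + y * y + z * z) - 1.0, 0.0) for x, y, z in samples_g]


# Identical samples (at rest, then 0.5 g extra on x) as each device reports them.
raw_a = [(0, 0, 4096), (2048, 0, 4096)]
raw_b = [(0, 0, 256), (128, 0, 256)]
print(enmo(to_g(raw_a, "device_a")))
print(enmo(to_g(raw_b, "device_b")))
```

Both calls print the same values, so evidence gathered for the shared ENMO step carries over to either device, provided each device's conversion to g has been verified.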

The work done to date is only a first step toward GGIR being accepted as the basis of a validated endpoint in a clinical trial.{6} However, once such acceptance has been granted, any organization could potentially use it as evidence for its own trial, even if that trial uses a different device. Furthermore, the large body of evidence from different therapeutic areas should make it significantly easier to extend validation to those applications. Finally, consolidating around a de facto standard such as GGIR will begin to provide consistent measurements that can be integrated into medical practice.

The Consistency Conundrum

It is hard to overstate how important consistent measurements across devices are if digital medicine is to help treat patients. Doctors could not treat patients if every blood pressure monitor or thermometer relied on its own metrics for measuring these vital signs. Doctors need to establish consistent normal ranges and thresholds to know how to treat their patients.

Of course, it is relatively easy for different manufacturers to make their blood pressure monitors and thermometers comply with standards without common algorithms, because each device measures only one or two values at a single point in time. That is essentially equivalent to the verification step for digital sensors. Achieving consistency in digital measures without a common algorithm, on the other hand, is far more difficult.

A major value of digital measurements is that they can monitor health continuously over long periods in the real world, capturing subtle changes. This capability is vital for accurately measuring the progression of chronic diseases, which affect more than 50% of the U.S. population and account for more than 86% of the country's healthcare costs.{8} However, it also means that rather than measuring a single value at one point in time, huge amounts of data must be aggregated, consolidated, evaluated, and summarized.

Without common algorithms, achieving consistent results is effectively impossible. Embedding these algorithms in open-source software makes it possible to ensure consistent results from the start.
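A toy example shows why. The Python sketch below summarizes the same epoch-level activity values into daily active minutes using two different thresholds, standing in for two proprietary algorithms. The data and both cut-points (0.05 g and 0.10 g) are invented for illustration and are not recommended values.

```python
def active_minutes_per_day(epochs, threshold_g, epoch_s=60):
    """Sum, per day, the minutes whose activity value is at or above threshold_g.

    epochs: iterable of (day, value_g) pairs, one per epoch of epoch_s seconds.
    """
    totals = {}
    for day, value_g in epochs:
        totals.setdefault(day, 0.0)  # keep days that have no active epochs
        if value_g >= threshold_g:
            totals[day] += epoch_s / 60
    return totals


# Hypothetical one-minute epochs for two days (values in g).
EPOCHS = [
    ("day1", 0.02), ("day1", 0.05), ("day1", 0.12), ("day1", 0.30),
    ("day2", 0.01), ("day2", 0.08), ("day2", 0.09), ("day2", 0.20),
]

algorithm_a = active_minutes_per_day(EPOCHS, threshold_g=0.10)
algorithm_b = active_minutes_per_day(EPOCHS, threshold_g=0.05)
print(algorithm_a)  # {'day1': 2.0, 'day2': 1.0}
print(algorithm_b)  # {'day1': 3.0, 'day2': 3.0}
```

For the same patient on the same day, one algorithm reports 1 active minute and the other reports 3, so results from studies using different algorithms cannot be pooled or compared against a common normal range. A shared, openly specified algorithm removes that source of variation.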

The Challenges

Although the benefits of using open-source algorithms appear quite clear, not all stakeholders in the industry support them. Some companies believe that they will be able to get their proprietary algorithms adopted and achieve monopoly profits as a result.

This approach faces immense barriers, not only because of the huge effort required to validate algorithms and the need for consistency across devices, but also because customers, regulatory bodies, and healthcare providers all want a level of transparency that proprietary algorithms generally do not offer. While some companies may succeed in keeping their algorithms to themselves, most applications being developed will likely adopt common, if not open-source, algorithms.

Moving Forward

Clinical trial sponsors and regulators are starting to play a major role in this movement toward adopting open-source algorithms. Many of the leading pharmaceutical companies and other industry organizations are participating in the Open Wearables Initiative (www.OWEAR.org), which promotes and facilitates the use of open-source algorithms for clinical endpoints. OWEAR is also working on CTTI’s Novel Endpoints Project. In Europe, Mobilise-D is a major collaborative program involving industry, academia, and government to develop open-source algorithms for gait measurement.

More can be done. Sponsors and regulators should strongly encourage the use of open-source algorithms. Sponsors should share validation material and pre-competitive data more broadly, and regulators should encourage and facilitate this sharing as much as possible. Through collaboration, we can significantly accelerate the adoption of these vital tools and help patients live healthier lives.

References

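- European Medicines Agency. Qualification opinion: stride velocity 95th centile as a secondary endpoint in Duchenne muscular dystrophy. https://www.ema.europa.eu/en/documents/scientific-guideline/qualification-opinion-stride-velocity-95th-centile-secondary-endpoint-duchenne-muscular-dystrophy_en.pdf

- Clinical Trials Transformation Initiative. CTTI Novel Endpoints: Detailed Steps. https://ctti-clinicaltrials.org/wp-content/uploads/2021/06/CTTI_Novel_Endpoints_Detailed_Steps.pdf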

- Goldsack JC, Coravos A, Bakker JP, Bent B, Dowling AV, Fitzer-Attas C, Godfrey A, et al. 2020. Verification, analytical validation, and clinical validation (V3): the foundation of determining fit-for-purpose for Biometric Monitoring Technologies (BioMeTs). npj Digital Medicine 3 (article number 55). https://doi.org/10.1038/s41746-020-0260-4

- This example borrows heavily from work presented by Janssen’s DEEP initiative at several conferences. It is an illustrative example. It is not meant to be comprehensive and has not been validated.

- Mahadevan N, Christakis Y, Di J, et al. 2021. Development of digital measures for nighttime scratch and sleep using wrist-worn wearable devices. npj Digital Medicine 4 (article number 42). https://doi.org/10.1038/s41746-021-00402-x

- A partial list of GGIR references can be found at https://github.com/wadpac/GGIR/wiki/Publication-list.

- Gill G, Patterson MR. 2021. Verisense Validation According to the V3 Validation Framework. https://verisense.net/validation-v3-framework

- Chapel JM, Ritchey MD, Zhang D, Wang G. 2017. Prevalence and Medical Costs of Chronic Diseases Among Adult Medicaid Beneficiaries. American Journal of Preventive Medicine 53(6) Supplement 2:143–54. https://www.ajpmonline.org/article/S0749-3797(17)30426-9/fulltext

Geoffrey Gill, MS, is President of Shimmer Americas, leading the U.S. operations and the commercial efforts for North and South America for Shimmer Research, a designer and manufacturer of medical-grade wearables.