Clinical Researcher—May 2019 (Volume 33, Issue 5)

PEER REVIEWED

Alexa Richie, DHSc; Dale Gamble, MHSc; Andrea Tavlarides, PhD; Carol Griffin

Many medical research centers struggle to assess the capacity of a clinical research coordinator (CRC). Not all studies have the same needs or require the same level of support. What is an appropriate balance of minimal risk versus greater than minimal risk or complex studies? How can the complexity of a clinical trial be assessed in a consistent manner across varying disease types? Research leaders struggle with these questions and with how to staff their research units adequately to prevent burnout or decreased quality of services.

Most research positions across institutions are extramurally funded, which not only complicates immediate staffing needs but also makes reaching a state of predictive staffing nearly impossible. Over the last several years, Mayo Clinic Florida has gone through an iterative process to develop and refine a tool with the dual purpose of assessing the complexity of a clinical trial and, by extension, using that complexity assessment to determine the trial capacity of a research coordinator.

Background

The tool is based upon the widely available National Cancer Institute (NCI) complexity assessment,{1} which focuses on the key elements of hematology/oncology trials as they relate to number of study arms, complexity of treatment, data collection complexity, and ancillary studies. These elements were categorized as standard, moderate, or highly complex. The NCI focused primarily on community-based programs, and scored on tasks related to direct patient care interactions.{2}

In our local development process, we wanted a tool with a broad scope so that it could be applied to studies of all diseases and even to non-treatment trials. We used the NCI tool as the foundation, but modified it so that any clinical trial at Mayo Clinic Florida would be assessed for complexity in a consistent manner. Through this process, we were able to score biobanks, registries, expanded access situations, drug trials, and device trials in a uniform fashion.

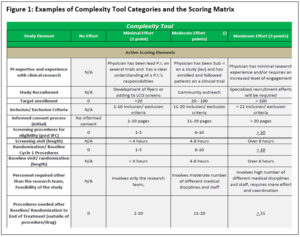

We considered all stages of running a study to determine overall study complexity. The first iteration of the tool comprised 21 unique elements, each with a possible score of 0 to 3 points. For example, we scored items such as recruitment strategies, principal investigator (PI) experience, screening procedures, number of visits, number of departments involved, frequency of monitoring, and activities at follow-up.

One of the elements assessed is the amount of data that must be collected by the local site. Data collection has a significant impact on how much effort will be required for the trial. A score of 3 would indicate that the data are complex and might need to be entered within three days.

Another example is the amount of time needed to complete a baseline/randomization visit. Minimal effort—a score of 1—would relate to any tasks in the baseline/randomization visit that occurred in less than four hours of total time. Baseline/randomization visits requiring greater than eight hours total time received a maximum score of 3.

Guidelines included in the tool provide instructions for what to do in cases such as when baseline and screening visits occur on the same day (only one value is scored 0 to 3 while the other is marked N/A). Figure 1 details how the scores can be applied to the categories. [See Richie_et al_Figures 1 and 3 for a PDF with images of Figures 1 and 3 that are easier to view.]

The highest possible score when adding up all 21 items is 63 points. The elements of the complexity tool relate to the overall study design, team engagement, target accrual, consenting processes, length of study, monitoring elements, billing requirements, and whether there are any associated ancillary studies.
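The arithmetic behind the 63-point ceiling can be sketched in a few lines of Python. This is only an illustration, not the tool itself: the function name, the use of `None` for an element marked N/A, and the choice to let an N/A element contribute zero points are all assumptions made for the example.

```python
from typing import Optional, Sequence

NUM_ELEMENTS = 21        # elements in the first iteration of the tool
MAX_PER_ELEMENT = 3      # each element is scored 0 to 3
MAX_TOTAL = NUM_ELEMENTS * MAX_PER_ELEMENT  # 21 * 3 = 63


def total_complexity(scores: Sequence[Optional[int]]) -> int:
    """Sum the element scores for one study.

    Assumption: None represents an element marked N/A and adds nothing.
    """
    if len(scores) != NUM_ELEMENTS:
        raise ValueError(f"expected {NUM_ELEMENTS} element scores, got {len(scores)}")
    total = 0
    for score in scores:
        if score is None:
            continue  # N/A element (hypothetical handling)
        if not 0 <= score <= MAX_PER_ELEMENT:
            raise ValueError(f"element score out of range: {score}")
        total += score
    return total
```

A study scored 3 on every element reaches the maximum of 63, and any element marked N/A simply lowers the attainable total under this assumption.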

The clinic’s Research Leadership team held several brainstorming meetings to refine the criteria used to assess any study and to evaluate their content validity across cancer, neurology/neuroscience, and general non-cancer trials. These meetings continued until we could ensure the tool could be applied consistently and comprehensively across all clinical trials. We then tested our tool on current protocols in all specialty disease areas, across Phase I through IV and pilot studies, observational registries or biobanks, and device trials. Multiple team members scored the same study to determine whether their interpretations of the protocol produced the same or similar scores. Once content validity was established, we were ready to develop a standard for using the Complexity Tool as a predictive workload indicator for the trial capacity of a research coordinator.

Developing a Standard

We began validating the tool in early 2017, with the Research Leadership team on the Florida campus scoring all active studies to date through December 2016 (n=~430). Each study received a complexity score. These scores were added to the overall portfolio management tracking tool in use at that time. The portfolio tracker lists studies by PI, Disease Type, and Coordinator, and was used to view the research activity within the disease or investigator’s study portfolio at any given time, including studies assigned to a specific research coordinator.

Using the Complexity Tool, the team was then able to review these portfolios to see what the cumulative complexity scores were for a designated group of staff or disease type (see Figure 2). With this versatile method, clinical trial portfolios could be evaluated for overall complexity through various views such as by PI, study coordinator, disease type, non-cancer vs. cancer trial, or clinical department. The scores varied depending on how the data were viewed, which led to further discussions on greater development and use of the tool.

Figure 2: Total Complexity Scores for Team and Designated Staff

| Study | Enrollment Status | Lead Coordinator | Complexity Score |
| --- | --- | --- | --- |
| Trial 1 | Open | CRC 1 | 42 |
| Trial 2 | Open | CRC 1 | 39 |
| Trial 3 | Closed to Enrollment – No Patients on Tx | CRC 1 | 4 |
| Trial 4 | Open | CRC 1 | 42 |
| Trial 5 | Open | CRC 1 | 48 |
| Trial 6 | Open | CRC 1 | 54 |
| Trial 7 | Closed to Enrollment – Has Active Patients | CRC 1 | 44 |
| Trial 8 | Open | CRC 1 | 50 |
| Trial 9 | Open | CRC 1 | 30 |
| Trial 10 | Open | CRC 2 | 43 |
| Trial 11 | Closed to Enrollment – Has Active Patients | CRC 2 | 43 |
| Trial 12 | Open | CRC 2 | 48 |
| Trial 13 | Open | CRC 2 | 50 |
| Trial 14 | Closed to Enrollment – No Patients on Tx | CRC 2 | 10 |
| Trial 15 | Closed to Enrollment – Has Active Patients | CRC 2 | 42 |
| Trial 16 | Closed to Enrollment – Has Active Patients | CRC 2 | 48 |
| Trial 17 | Closed to Enrollment – Has Active Patients | CRC 2 | 39 |
| Total Complexity Score for this Team | | | 676 |
| Total Complexity for CRC 1 | | | 353 |
| Total Complexity for CRC 2 | | | 323 |
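The totals in Figure 2 are simple sums over each coordinator's assigned studies. A minimal Python sketch of that roll-up follows, using the scores from the figure; the tuple layout and the function name are illustrative assumptions and do not describe the actual portfolio tracker.

```python
from collections import defaultdict

# (study, lead coordinator, complexity score), copied from Figure 2
PORTFOLIO = [
    ("Trial 1", "CRC 1", 42), ("Trial 2", "CRC 1", 39), ("Trial 3", "CRC 1", 4),
    ("Trial 4", "CRC 1", 42), ("Trial 5", "CRC 1", 48), ("Trial 6", "CRC 1", 54),
    ("Trial 7", "CRC 1", 44), ("Trial 8", "CRC 1", 50), ("Trial 9", "CRC 1", 30),
    ("Trial 10", "CRC 2", 43), ("Trial 11", "CRC 2", 43), ("Trial 12", "CRC 2", 48),
    ("Trial 13", "CRC 2", 50), ("Trial 14", "CRC 2", 10), ("Trial 15", "CRC 2", 42),
    ("Trial 16", "CRC 2", 48), ("Trial 17", "CRC 2", 39),
]


def totals_by_coordinator(rows):
    """Return the cumulative complexity score for each lead coordinator."""
    totals = defaultdict(int)
    for _study, coordinator, score in rows:
        totals[coordinator] += score
    return dict(totals)


per_crc = totals_by_coordinator(PORTFOLIO)
team_total = sum(per_crc.values())
print(per_crc)      # {'CRC 1': 353, 'CRC 2': 323}
print(team_total)   # 676
```

Grouping on a different field (PI, disease type, or department) would give the other portfolio views described in the text.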

While at first glance there did not appear to be general logic or break points in the scoring, once the studies were grouped by disease type, there were obvious natural breaks in the scoring that could be used to delineate what could be considered a high-, moderate-, or low-complexity trial. For example, a trial score of 45 or higher on the tool was considered highly complex; scores of 30 to 44 moderately complex; and scores below 30 low in complexity.
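The break points described above translate directly into a small classification rule. The sketch below uses the thresholds stated in the text; the function name and the band labels are illustrative choices.

```python
def complexity_band(score: int) -> str:
    """Map an overall complexity score to the band described in the text."""
    if score >= 45:
        return "high"
    if score >= 30:
        return "moderate"
    return "low"


# Example: three overall scores taken from open trials in Figure 2
for trial, score in [("Trial 6", 54), ("Trial 2", 39), ("Trial 9", 30)]:
    print(trial, score, complexity_band(score))
```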

Addition of a Step-Down Score

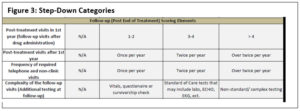

From the initial review and feedback, it was recognized that there are varying stages of work throughout the life cycle of a clinical trial. To accommodate the fluctuating needs or varying effort required, a step-down scoring process was incorporated. The initial score calculated is considered the trial’s overall complexity, and its use assumes the study is active and enrolling patients.

The first step-down score assesses study needs once a trial closes to accrual. This step accounts for trials that may still have active patients being followed, but are no longer accruing new patients. To determine this first step-down score, the scores for the six elements that relate to enrollment and baseline assessments are subtracted from the overall complexity score.

The second step-down score is for trials that have closed to accrual and have no patients on active treatment. This score applies to trials that are in long-term follow-up only—for example, those assessing survivorship. To determine this score, only the last four elements in the complexity tool are applied for a total maximum score of 12 points (see Figure 3).

Together, the overall score and the two step-down scores provide a consistent, replicable, and scalable method for assessing the complexity of any type of trial at each stage of its life cycle. The step-down scoring reflects the complexity of each CRC's portfolio, regardless of whether a given study is accruing or only following patients.
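The three scoring stages can be sketched as a single function. This is a hedged illustration only: the article does not list which six elements relate to enrollment and baseline, so the index positions below are hypothetical placeholders, as are the status labels and the assumption that elements are stored in the tool's order so that the last four are the long-term follow-up elements.

```python
from typing import Sequence

NUM_ELEMENTS = 21

# Hypothetical positions of the six enrollment/baseline elements;
# the real tool defines which elements these are.
ENROLLMENT_BASELINE_IDX = (2, 3, 4, 5, 6, 7)
FOLLOW_UP_ELEMENTS = 4  # the last four elements, per the text


def staged_score(scores: Sequence[int], status: str) -> int:
    """Return the complexity score appropriate to the trial's stage.

    status (hypothetical labels):
      "open"            - active and enrolling: overall score
      "closed_active"   - closed to accrual, patients still followed
      "closed_followup" - closed to accrual, long-term follow-up only
    """
    if len(scores) != NUM_ELEMENTS:
        raise ValueError(f"expected {NUM_ELEMENTS} element scores")
    overall = sum(scores)
    if status == "open":
        return overall
    if status == "closed_active":
        # First step-down: subtract the six enrollment/baseline elements.
        return overall - sum(scores[i] for i in ENROLLMENT_BASELINE_IDX)
    if status == "closed_followup":
        # Second step-down: only the last four elements count (max 12).
        return sum(scores[-FOLLOW_UP_ELEMENTS:])
    raise ValueError(f"unknown status: {status}")
```

For a study scored 3 on every element, the function yields 63 while open, 45 after the first step-down, and 12 in long-term follow-up, which matches the 12-point maximum stated for the second step-down.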

Conclusion

In summary, the Research Leadership team was able to develop, test, and implement a complexity assessment that allowed for a uniform review of any clinical trial. Through its implementation, we were able to standardize what is considered highly or moderately complex.

After implementation, we identified that further analysis could correlate the complexity score of a study with the workload capacity of a CRC. Further development of the tool was approved, and results of the next iteration of the Complexity Tool will be discussed in a subsequent publication.

References

- NCI Trial Complexity Elements & Scoring Model. 2009. National Cancer Institute/National Institutes of Health. https://ctep.cancer.gov/protocoldevelopment/docs/educational_slides_for_scoring_trial_complexity.ppt

- DOI:10.1200/JOP.2015.008920. 2016. J Oncol Pract 12(5):e536–47.

Alexa Richie, DHSc, is a Research Operations Manager at Mayo Clinic Florida.

Dale Gamble, MHSc, is a Program Manager at Mayo Clinic Florida.

Andrea Tavlarides, PhD, is a Research Supervisor at Mayo Clinic Florida.

Carol Griffin is a Research Operations Administrator at Mayo Clinic Florida.